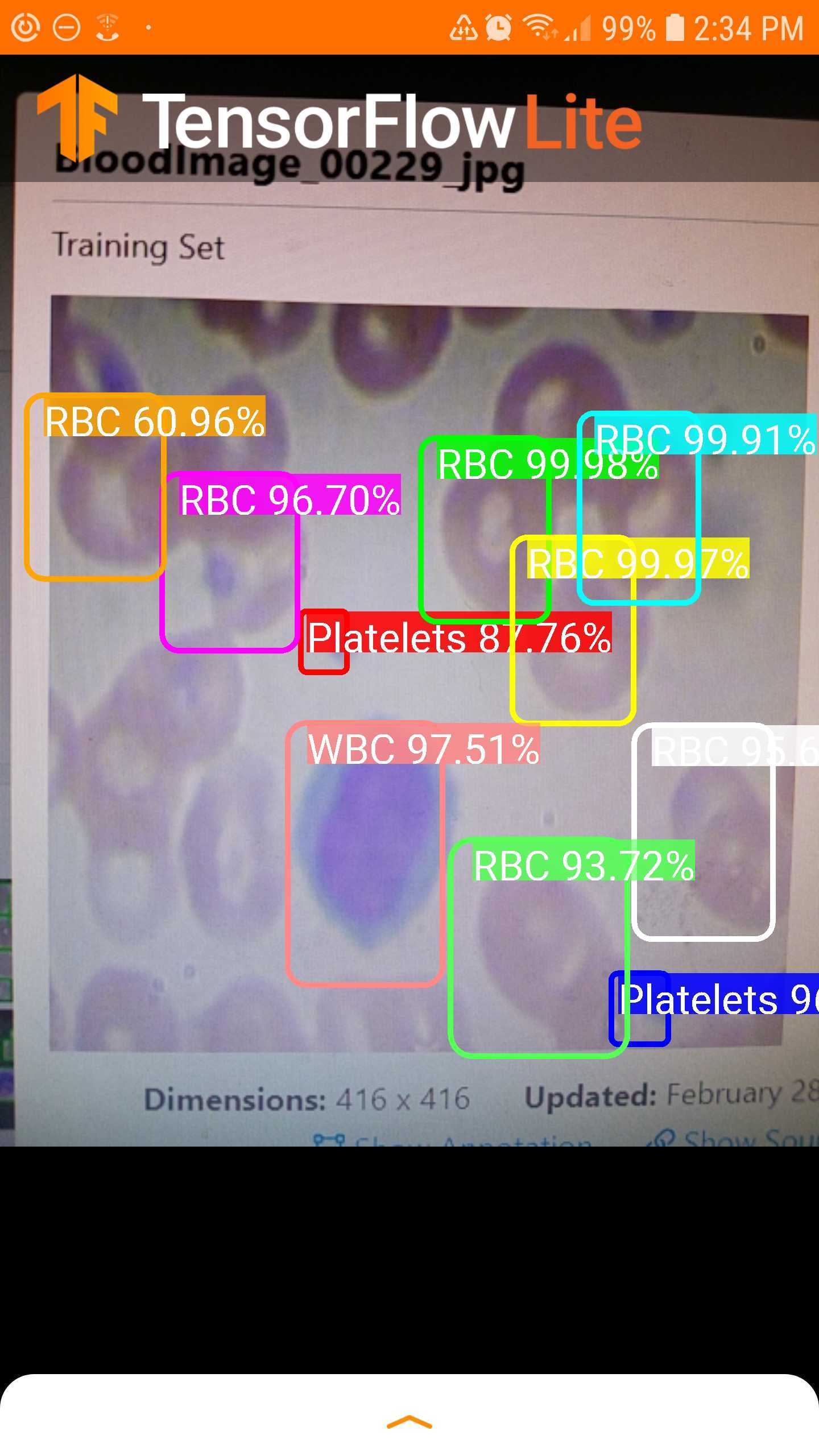

If you need a fast model on lower-end hardware, this post is for you. In this post, we walk through the steps to train and export a custom TensorFlow Lite object detection model with your own object detection dataset to detect your own custom objects. Whether for mobile phones or IoT devices, optimization is an especially important last step before deployment because these devices have limited compute.

TensorFlow Lite is the official TensorFlow framework for on-device inference, meant to be used on small devices to avoid a round-trip to the server. This has many advantages, such as greater capacity for real-time detection, increased privacy, and not requiring an internet connection. Note that TensorFlow Lite isn't for training models: you train in TensorFlow and then bring the trained model to production in TensorFlow Lite.

In this tutorial, we will train an object detection model on custom data and convert it to TensorFlow Lite for deployment. Open up this Colab Notebook to Train TensorFlow Lite Model. The associated Colab Notebook for this post contains all the code to both train your model in TensorFlow and bring it to production in TensorFlow Lite.

If you don't have a dataset, you can follow along with a free Public Blood Cell Detection Dataset. If you have unlabeled data, you will first need to draw bounding boxes around your objects in order to teach the computer to detect them; you can learn the best practices in this post on how to label images with CVAT, LabelImg, VoTT, or LabelMe.

TensorFlow models need data in the TFRecord format to train. Luckily, Roboflow converts any dataset into this format for us. To export your own data for this tutorial, sign up for Roboflow and make a public workspace, or make a new public workspace in your existing account.

At the end of the notebook, you will have a `.tflite` file that you can use in the official TensorFlow Lite Android Demo, iOS Demo, or Raspberry Pi Demo.
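Roboflow handles the TFRecord conversion for you, but it helps to know roughly what happens to each labeled box along the way. TFRecord files for the TensorFlow Object Detection API commonly store box corners normalized to the range [0, 1] under feature keys such as `image/object/bbox/xmin`. The minimal sketch below is pure Python and assumes boxes arrive from a labeling tool as pixel `(xmin, ymin, xmax, ymax)` tuples; the `normalize_boxes` helper is hypothetical and is not Roboflow's implementation.

```python
from typing import Dict, List, Tuple


# Hypothetical helper: convert pixel-space boxes (as exported by a labeling
# tool such as LabelImg) into the normalized [0, 1] values that TFRecord
# examples for the TensorFlow Object Detection API commonly store.
def normalize_boxes(
    boxes: List[Tuple[float, float, float, float]],  # (xmin, ymin, xmax, ymax) in pixels
    width: int,
    height: int,
) -> Dict[str, List[float]]:
    if width <= 0 or height <= 0:
        raise ValueError("image width and height must be positive")
    features: Dict[str, List[float]] = {
        "image/object/bbox/xmin": [],
        "image/object/bbox/ymin": [],
        "image/object/bbox/xmax": [],
        "image/object/bbox/ymax": [],
    }
    for xmin, ymin, xmax, ymax in boxes:
        # Reject boxes that are empty or extend past the image borders.
        if not (0 <= xmin < xmax <= width and 0 <= ymin < ymax <= height):
            raise ValueError(f"box {(xmin, ymin, xmax, ymax)} lies outside the image")
        # Divide x coordinates by the image width and y by the image height.
        features["image/object/bbox/xmin"].append(xmin / width)
        features["image/object/bbox/ymin"].append(ymin / height)
        features["image/object/bbox/xmax"].append(xmax / width)
        features["image/object/bbox/ymax"].append(ymax / height)
    return features


if __name__ == "__main__":
    # One box in a 640x480 image (made-up numbers for illustration).
    print(normalize_boxes([(64, 48, 320, 240)], width=640, height=480))
```

For this made-up box, the minimums come out as 0.1 and the maximums as 0.5. Keeping coordinates normalized means the labels stay valid if the image is resized before training.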
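Since TensorFlow Lite isn't used for training, the final step is converting a trained TensorFlow model into a `.tflite` file and running it with the TensorFlow Lite interpreter. The sketch below assumes TensorFlow 2.x and uses a tiny stand-in model (`TinyModel`, hypothetical and not an object detector) so it runs on its own: it saves a SavedModel, converts it with `tf.lite.TFLiteConverter.from_saved_model`, and runs one inference with `tf.lite.Interpreter`. Exporting a real detector usually needs an extra, model-specific export step before conversion, which is not shown here.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


# Hypothetical stand-in for a trained model (NOT an object detector):
# averages each color channel, then maps the 3 averages to 4 class scores.
class TinyModel(tf.Module):
    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([3, 4]), name="w")

    @tf.function(input_signature=[tf.TensorSpec([1, 32, 32, 3], tf.float32)])
    def __call__(self, x):
        pooled = tf.reduce_mean(x, axis=[1, 2])          # shape (1, 3)
        return tf.nn.softmax(tf.matmul(pooled, self.w))  # shape (1, 4)


workdir = tempfile.mkdtemp()
saved_model_dir = os.path.join(workdir, "saved_model")
tflite_path = os.path.join(workdir, "model.tflite")

# 1. Export the TensorFlow model as a SavedModel with an explicit signature.
module = TinyModel()
tf.saved_model.save(
    module, saved_model_dir, signatures=module.__call__.get_concrete_function()
)

# 2. Convert to TensorFlow Lite; Optimize.DEFAULT requests the default
#    post-training optimizations (quantization where applicable).
converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
converter.optimizations = [tf.lite.Optimize.DEFAULT]
tflite_model = converter.convert()
with open(tflite_path, "wb") as f:
    f.write(tflite_model)

# 3. Run one inference with the interpreter, as an on-device app would.
interpreter = tf.lite.Interpreter(model_path=tflite_path)
interpreter.allocate_tensors()
input_details = interpreter.get_input_details()[0]
output_details = interpreter.get_output_details()[0]

image = np.random.rand(1, 32, 32, 3).astype(input_details["dtype"])
interpreter.set_tensor(input_details["index"], image)
interpreter.invoke()
scores = interpreter.get_tensor(output_details["index"])
print(scores.shape, scores.sum())
```

On a device, the same load, `set_tensor`, `invoke`, `get_tensor` sequence runs against the `.tflite` file. Setting `tf.lite.Optimize.DEFAULT` is optional; it usually yields a smaller, faster model at the possible cost of a little accuracy.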
